Generative AI is booming. Using it has become so common that AI-generated content can be found across social media and the wider web. AI has undoubtedly brought efficiency, but it also brings the danger of deepfakes.

Countless images circulate on the internet. That is not inherently bad, but things go wrong when people use them with ulterior motives: for instance, posting someone's picture without their permission to spread misinformation, or concealing their own identity to commit fraud or other cybercrimes.

Given how serious the problem is, tech giants have agreed to work on making AI more responsible.

Among them, Google has taken the initiative by developing a tool that identifies whether an image was generated by AI.

Google’s New AI Image Detection Tool

Google developed its new AI image detection tool, called “SynthID”, together with its AI research lab, Google DeepMind. The tool relies on two AI models: one embeds a watermark in the AI-generated image, and the other identifies it.

The watermark it embeds is invisible to the human eye.

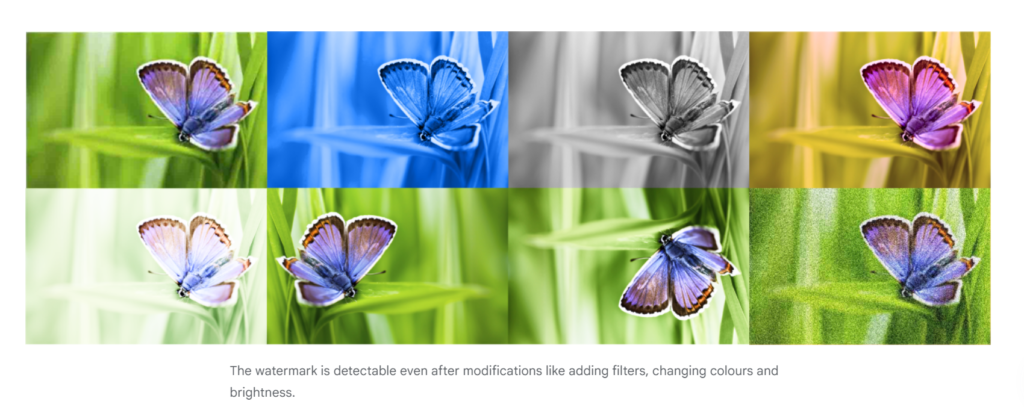

Unlike traditional watermarks, SynthID embeds its digital watermark directly into the pixels of the generated image. Google says the watermark is designed to be robust enough that common edits, such as cropping, resizing, or enhancing the image, won't remove it.

Image source: Google DeepMind
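SynthID's image models are trained neural networks, and the toy below does not reproduce them. It only illustrates the general idea of two cooperating parts, one that hides a signal in the pixels and one that tests for it, using a much older and simpler technique: a spread-spectrum watermark. Everything here is a hypothetical choice for illustration: the integer secret key, the `embed`/`score`/`is_watermarked` functions, and the strength of 3 levels on a 0-255 scale. The embedder nudges every pixel up or down according to a key-derived +1/-1 pattern; the detector correlates the image with the same pattern.

```python
import random
from statistics import fmean

# Toy spread-spectrum watermark, NOT SynthID's method (SynthID uses trained
# neural networks). A hypothetical integer key seeds a +1/-1 pattern that is
# added to the pixels at low strength, then detected by correlation.


def make_pattern(key: int, n: int) -> list[int]:
    """Derive a reproducible +1/-1 pattern of length n from the secret key."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed(pixels: list[int], key: int, strength: int = 3) -> list[int]:
    """Embedder: nudge each 0-255 grayscale pixel up or down by `strength`."""
    pattern = make_pattern(key, len(pixels))
    return [min(255, max(0, p + strength * w)) for p, w in zip(pixels, pattern)]


def score(pixels: list[int], key: int) -> float:
    """Detector: correlate mean-centred pixels with the key's pattern.

    Unmarked images score near 0; marked images score near `strength`.
    """
    pattern = make_pattern(key, len(pixels))
    mean = fmean(pixels)
    return fmean((p - mean) * w for p, w in zip(pixels, pattern))


def is_watermarked(pixels: list[int], key: int, strength: int = 3) -> bool:
    """Flag the image if its score is closer to `strength` than to 0."""
    return score(pixels, key) > strength / 2


if __name__ == "__main__":
    rng = random.Random(0)
    # Stand-in 256x256 grayscale "image", flattened to a list of pixel values.
    original = [rng.randint(20, 235) for _ in range(256 * 256)]
    marked = embed(original, key=42)
    brighter = [min(255, p + 10) for p in marked]  # a simple global edit
    print(is_watermarked(original, 42))  # expected: False
    print(is_watermarked(marked, 42))    # expected: True
    print(is_watermarked(brighter, 42))  # expected: True
```

Because the detector subtracts the image's average before correlating, a uniform brightness change leaves the score intact. Cropping is another matter: once the pixels no longer line up with the key's pattern, this toy detector fails, whereas Google says SynthID's watermark is designed to survive such edits.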

That said, Google does not claim SynthID is perfect: against extreme image manipulation, the watermark may not hold up.

Google describes this as a first step that it will keep improving, so that in the future the tool can withstand even extreme manipulation and keep pace with new technology.


How does SynthID identify AI images?

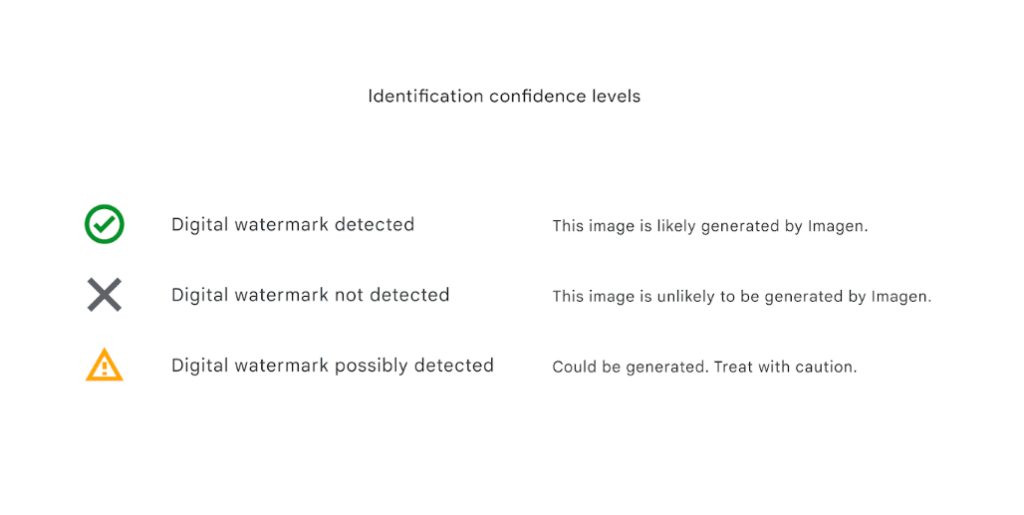

SynthID reports its result at one of three confidence levels. When you submit an image for checking, it returns one of these responses:

- The image is AI-generated (the watermark was detected with high confidence).

- No watermark was detected. This does not prove the image is human-made; it only means SynthID found no sign of its own watermark.

- A mixed response: the tool cannot confirm that the image was AI-generated, but it found some sign of the watermark, so it flags the image with a warning.

Image source: Google DeepMind
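One way to picture a three-way answer is as two thresholds on a single confidence score. The sketch below is an assumption, not Google's code: Google has not published how SynthID maps its internal confidence to the three responses, so the `verdict` function, the 0-to-1 confidence scale, the 0.9/0.1 cutoffs, and the label strings are all hypothetical.

```python
# Hypothetical cutoffs: SynthID's real decision rule is not public.
HIGH = 0.9
LOW = 0.1


def verdict(confidence: float, high: float = HIGH, low: float = LOW) -> str:
    """Map a detector confidence in [0, 1] to one of three responses."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if not low < high:
        raise ValueError("low threshold must be below high threshold")
    if confidence >= high:
        return "watermark detected"
    if confidence <= low:
        return "watermark not detected"
    # Anything in between falls into the cautious 'warning' zone.
    return "watermark possibly detected"


if __name__ == "__main__":
    for c in (0.97, 0.02, 0.5):
        print(c, "->", verdict(c))
```

Widening the gap between the two cutoffs makes the confident answers rarer but more reliable, pushing more borderline images into the middle "possibly detected" response.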

Who can access SynthID?

For now, SynthID is in beta, and only a limited number of Google Cloud customers can use it, through Imagen, the text-to-image model on Vertex AI. This means SynthID only works on images created with Imagen: if an image comes from another tool, such as DALL-E or Midjourney, SynthID cannot detect it.

Google plans to expand access soon, so SynthID may eventually be integrated into other Google products and, if partnerships materialize, into third-party AI models.

Thank you for reading! We hope you found the information helpful. For more such articles, you can follow our blog.

A team of digital marketing professionals who know the Art of making customers fall in LOVE with your brand!
