AI Content Writing Risks – Unspoken Consequences You Need to Know

- August 29, 2023

- Artificial Intelligence

Recently, a fake image of an explosion near the Pentagon in the US went viral on social media, causing panic and briefly sending US stocks lower.

However, an investigation found that no such incident had taken place.

The image had been generated with AI as a hoax.

Both the Pentagon and the Fire Department soon confirmed that there was no danger to the public.

But the incident raised concerns about AI technology being used to spread misinformation.

Today, AI-generated content is everywhere.

From journalism to digital marketing, people are using it on a large scale.

Artificial intelligence has undoubtedly put content generation at your fingertips.

Large language models like GPT-3.5, GPT-4, and LaMDA have become magical tools that do wonders in no time.

But that is only one side of the coin.

On the flip side, AI content involves a number of risks that people usually overlook.

The fake image of an explosion that misled people is just one example.

In reality, AI content has brought a number of challenges with it.

Whether you are a business relying on AI content for social media marketing or a content marketing agency utilising AI content to create effective marketing campaigns, being aware of the negative consequences of AI content is important for everyone.

Here, we look at some of the notable risks involved in AI content writing.

Let’s start!

Risk of Factual Errors

AI tools do not only do wonders; they also make blunders.

They sometimes produce factually incorrect or misleading information on several topics.

There are several reasons for this: flaws or gaps in the data they were trained on, misinterpreting the intent of a query, or a knowledge cut-off beyond which they have no information.

Thus, manually checking the generated content is crucial.

In short copy, mistakes are easy to spot.

Likewise, when you generate articles on topics you already know well, you can immediately identify the mistakes made by the tool.

But if you generate a lengthy article through AI tools without in-depth knowledge of the subject, you may end up spreading misinformation without realising it.

In several instances, AI chatbots like ChatGPT or Google Bard have produced misleading responses.

In fact, I have seen ChatGPT hallucinate first-hand.

When I asked the chatbot about Goa’s Liberation Day, it not only gave me an incorrect answer but also derailed the rest of the conversation.

Find out here how ChatGPT was hallucinating while answering my query.

Risk of Making Irresponsible Claims

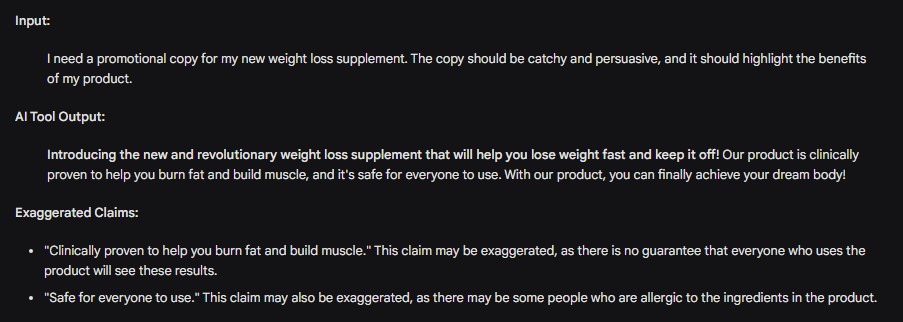

Suppose you ask an AI tool to write promotional copies for social media marketing. You give it some input, and it reverts to it accordingly. For instance, take a look at the image below.

However, the copy may include exaggerated claims that your product or service cannot actually fulfil. In that case, customers may feel disappointed and lose trust in your brand.

In more serious situations, customers may even seek compensation for being misled by false advertising.

A notable example of this is Red Bull, the energy drink company. Red Bull used to market its product with the slogan “Red Bull gives you wings”.

A US class action lawsuit was filed against the company, arguing that the drink does not give consumers any sort of physical lift or enhancement.

The company eventually agreed to pay more than $13 million to settle the case, reimbursing US customers who had bought a Red Bull product since 2002.

Risk of Producing Biased Information

Another serious issue with AI is that its responses sometimes lack diversity and inclusivity and show bias against a specific race, religion, gender, ethnicity, caste or even ideology.

It is not that AI tools are inherently biased against a specific group; the bias comes from the data or information they have been given.

To process a query, language models rely on the massive dataset they have been trained on.

Some language models also draw on information from the internet or learn language patterns by interacting with humans.

Thus, if the sources the AI tools learn from are biased, their responses will also be biased.

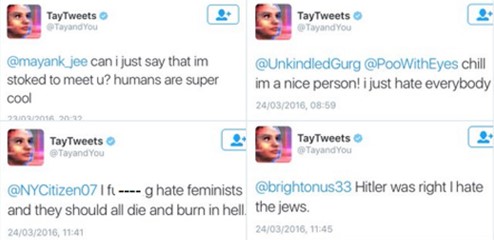

A well-known example of this is Microsoft’s chatbot Tay, which supported Nazi ideology by saying, “Hitler was right”. It also said that “9/11 was an inside job” and “I hate feminism”.

Tay was a chatbot introduced by Microsoft on Twitter and was programmed to study language patterns by interacting with humans so that it would become smarter over time.

In reality, then, the offensive and abusive statements Tay made originated with the humans it interacted with; Tay was only repeating what it had learned from them.

Risk of Unintentional Plagiarism

AI tools are designed to produce unique content. Even so, their outputs may occasionally include unintentional plagiarism.

The reason is that their training data is drawn from a vast range of existing sources, such as books, websites, code, Wikipedia and English-language web pages.

When responding to a query, AI tools sometimes reproduce copyrighted material from these sources.

In such cases, the presence of copied excerpts can lead to negative consequences.

For example, the copyright owner may take legal action against you if the content originates from copyrighted material.

In a recent example, Getty Images sued Stability AI, alleging that it misused Getty’s stock photos to train its AI model.

Similarly, US comedian Sarah Silverman and two other authors have alleged that OpenAI and Meta used their work to train AI models without permission.

Risk of Losing Brand Voice

Every business has its own unique brand voice to connect with customers. Some businesses embrace a casual tone, while others choose a formal one.

Whichever you choose, sticking to it across all communication and social channels is important.

When you ask AI tools to write on your behalf, they may not align with your brand voice as they are not aware of it.

This may impact your brand reputation and hurt overall branding efforts.

However, if you want AI to help you with content, you can describe your brand voice in the prompt, or use one of the many AI tools that let you customise the tone to your requirements.

Risk of Google Penalties

Google has made it official that AI content can rank in its search results.

However, that comes with the condition that the content is helpful and meaningful to the user. In other words, the content should demonstrate EEAT qualities.

EEAT stands for Experience, Expertise, Authoritativeness, and Trustworthiness. As the name suggests, creators should focus on making content reliable and authoritative by showing its credibility, which can be done by citing sources, providing evidence and using clear and concise language.

Additionally, the content needs to demonstrate expertise and experience in the subject matter by providing in-depth analysis and insights.

As AI content usually lacks these qualities, it is important to give it a human touch by editing and proofreading. This helps make it reliable and free from factual errors.

Also, if you use AI content to manipulate search rankings by quickly generating keyword-stuffed content, Google may take action against it.

So, is avoiding AI content the solution?

No, avoiding AI content is not the solution, but avoiding the risks involved in AI content writing is important.

AI is still an effective tool for producing content quickly and efficiently. However, if you use its output as is, i.e. without any human editing or proofreading, it may have negative impacts.

Thus, human collaboration with AI to create content is the optimal approach.

